posted 10-31-2010 01:15 PM

Ted, Jus thinkin'

I agree that ESS is important if we are to be taken seriously as a science (the other choice would be to be taken seriously as an artform - but then we should plan on never using the words valid or reliable). Using ESS, an examiner can easily answer the question that would stump him under other manual scoring models: what is the level of statistical significance of the manual score?
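To make that question concrete, here is a minimal sketch of how a manual total score could be turned into a level of significance, assuming the normative scores are roughly normally distributed. The means and standard deviations are made-up placeholders, not the published ESS norms, and the function names are hypothetical.

```python
import math

def normal_cdf(x, mean, sd):
    """Cumulative probability of a normal distribution at x."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))

# Hypothetical normative parameters (placeholders, NOT published norms).
TRUTHFUL_NORM = {"mean": 8.0, "sd": 8.0}
DECEPTIVE_NORM = {"mean": -8.0, "sd": 8.0}

def p_value_deceptive(score):
    """Chance that a truthful examinee would score this low or lower.

    A small value supports a deceptive classification.
    """
    return normal_cdf(score, TRUTHFUL_NORM["mean"], TRUTHFUL_NORM["sd"])

def p_value_truthful(score):
    """Chance that a deceptive examinee would score this high or higher.

    A small value supports a truthful classification.
    """
    return 1.0 - normal_cdf(score, DECEPTIVE_NORM["mean"], DECEPTIVE_NORM["sd"])

if __name__ == "__main__":
    for total in (-12, 0, 12):
        print(total, round(p_value_deceptive(total), 4), round(p_value_truthful(total), 4))
```

The idea is only that a score is compared against the normative distribution of the opposite group, so the examiner can report a probability of error instead of a bare number.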

Of course, a good computer algorithm is designed and normed to calculate this type of answer - though some of our algorithms actually do not.

OSS-3 is the most powerful and complete algorithm available right now. It is based on decades of previous research and is premised on mathematical and statistical solutions to every problem encountered in polygraph scoring and decision making. What's more, it is open source, completely documented, available cross-platform for the entire profession, and free. In contrast, other algorithms all have shortcomings: they are based on arbitrary assumptions, use no statistical decision theory, lack documentation or any information about how they function, depend on proprietary, unknown or un-validated features, or lack any statistical/mathematical decision model at all.

Choice seems simple to me.

Now, should you have an algorithm?

Yes.

Try going to court with nothing but your manual scores. Someone will show up with a computer score - even if it is the opposing counsel's expert.

You might as well get the best (most powerful and free) algorithm available and know in advance what it says.

If we want to be taken seriously as a scientific profession, we have to outgrow our fear of using scientific concepts to do our work. And we have to outgrow our fear of using computers to do what they are good at - fancy math.

If we cannot define and structure our manual scoring procedures to the point where they could be performed by a logical process of steps (i.e., an algorithm) using statistical decision models, then we are not really talking science, are we? We'd be back to that artform problem. (Try selling that for your entire career.)

If we were only interested in the polygraph for its confession-gaining value, then we should just stop requiring numerical scores and stop trying to teach test data analysis. Then we could stop the silly bid'ness of comparison questions. In the absence of numerical scores and statistical decision models, you just ask the questions and decide how you FEEL about how the data or the examinee looks.

Of course, it's not science at all this way, and our expertise would be limited to "expertise at being an expert" - which is really just grandiose narcissism that is lacking in scientific substance.

Better solution: use a good algorithm, with complete documentation of its statistical model, and evidence to support its effectiveness. Start to learn what the algorithm actually does and how it works. Then: use a manual scoring protocol based on scientific methods and scientific decision theory.

Remember: in science there is no such thing as a perfect test, and there is always some uncontrolled variance or "error variance" (what we call sh%^& happens). If you know how your manual scoring model works, and if you know a little about how your algorithm works, then you will find that you can determine the reasons for those occasional disagreements. Usually there is something odd about the data.

Of course, never try to go to court with the wrong case (one indicator of "wrong case" might be when your manual and algorithm scores do not agree - there will be obvious disagreement over your results in court when this occurs). Most of the time the results of an algorithm and an empirically derived, evidence-based manual scoring model will agree.

The point is that if you try to argue validity (and where else besides court would a real argument occur) you should have both a manual score and an algorithm score. If you do not have an algorithm score then your opposing counsel will have one and you will feel like you got caught with your pants down and just stepped on your junk. You should also use an algorithm with complete documentation, for which you are allowed to understand how it works. You should not attempt to use an algorithm for which you cannot understand wtf it does - either because of no documentation, proprietary secrecy, or because there really is no statistical decision model (i.e., no statistical descriptors of the normative data including mean, variance and parametric shape). Whether you actually understand OSS-3 or not is of secondary importance. The fact that you are permitted to understand it, if you choose, will still help you - because you can easily contact someone who can have that conversation instead of you. With proprietary, undocumented, and non-statistical sorting algorithms, nobody could answer the questions of the opposing counsel's scientific-minded expert regarding the level of statistical significance, how it is calculated, and the inferential probability of error (sensitivity, specificity, FP and FN rates and the statistical confidence intervals around these).
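For anyone who wants to see what those error statistics look like in practice, here is a rough sketch that computes sensitivity, specificity, FP and FN rates with 95% Wilson score confidence intervals from a table of confirmed cases. The counts are illustrative only, not data from any study, the function names are hypothetical, and inconclusive results are simply left out.

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 is about 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1.0 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half

def accuracy_summary(tp, fn, tn, fp):
    """Rates and intervals from confirmed-case counts.

    tp: deceptive cases scored deceptive, fn: deceptive cases scored truthful,
    tn: truthful cases scored truthful,  fp: truthful cases scored deceptive.
    """
    n_deceptive = tp + fn
    n_truthful = tn + fp
    return {
        "sensitivity": (tp / n_deceptive, wilson_interval(tp, n_deceptive)),
        "specificity": (tn / n_truthful, wilson_interval(tn, n_truthful)),
        "fn_rate": (fn / n_deceptive, wilson_interval(fn, n_deceptive)),
        "fp_rate": (fp / n_truthful, wilson_interval(fp, n_truthful)),
    }

# Illustrative counts only, NOT real study data.
summary = accuracy_summary(tp=45, fn=5, tn=40, fp=10)

if __name__ == "__main__":
    for name, (rate, (low, high)) in summary.items():
        print(f"{name}: {rate:.3f} (95% CI {low:.3f} to {high:.3f})")
```

With a documented algorithm, every number in that table can be traced back to a published sample and a stated calculation, which is exactly what an opposing expert will ask about.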

It does not matter that we may not ever plan on going to court with most cases. Polygraph, at its core, is supposed to be about truth, ethics and science. Conducting exams that do not attempt to meet the highest standards is inexcusable. We now know that it is easy to conduct a test of the highest quality - just use an evidence-based scientific approach. In other words: do what the science says and be wary of unproven or unstudied theories, complex ideas without evidence, and any proprietary or boutique ideas that belong to one individual. Science belongs to the profession. The issues are so many and so complex that one person will not have all the answers. Instead, we will have to rely on all of the smart people who took the time to think up an idea and study it - having the courage to discard things that do not work. We have been at this long enough now that we have many answers, if we put the pieces together.

If we do not take the time to learn about the science behind the polygraph, then our expertise will be limited to being expert at using a checklist - which is not expert at all. Even the box-boys can use a checklist. And if we think that our expertise is limited to interrogation and gathering information during the interview, then try to do that without an accurate test result - it is the scientific accuracy of the test that improves the effectiveness of our interrogation and establishes an ethical basis for our assumptions and accusations of guilt. No polygraph test and you are just another interrogator without a polygraph. No polygraph accuracy and we are fakes.

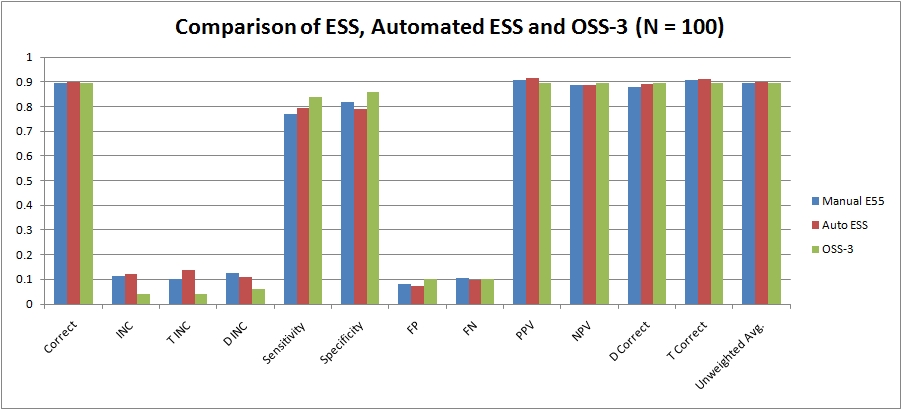

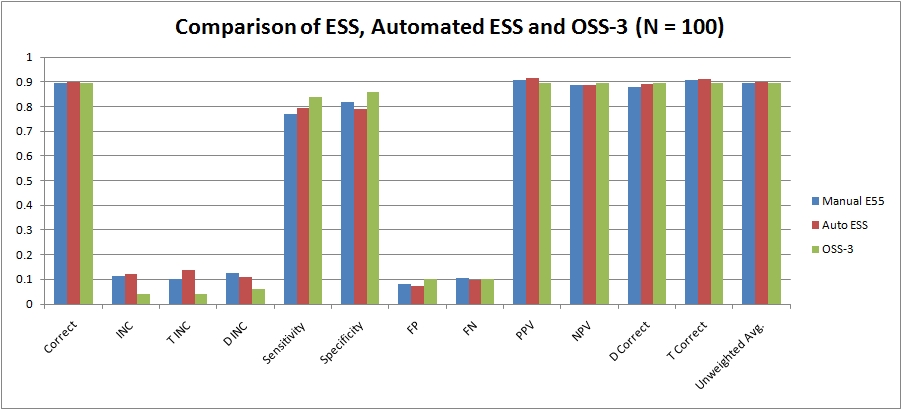

Here is a bar-chart comparison of the ESS along with an automated version of the ESS (automated measurements and automated structured decision rules) and the OSS-3 algorithm.

What you should start to notice is that if we are taking a scientific approach to the polygraph - test administration and test data analysis - there will inevitably begin to be much less difference in the statistical and decision-theoretic concepts that we use. The computer can do some fancy things for us. Our job is to understand that and exploit it.

ESS is designed to be an entirely scientific and evidence-based approach to manual test data analysis. Just remember that it is not new, and it is not something we just made up. It is based on decades of previous research by everyone you already know about: Backster, DACA/NCCA, Barland, Kircher, Raskin (and other Utah scientists), Senter, Krapohl and more. It just happens to also be simple, reliable, and easy to learn and use.

'nuff for now.

Peace,

r

Polygraph Place Bulletin Board

Professional Issues - Private Forum for Examiners ONLY

Scoring Agorhythm
